
From: Mercatus Center/George Mason University

Jerry Ellig, James Broughel

For more than three decades, presidents have instructed executive branch agencies to use the results of Regulatory Impact Analyses (RIAs) when deciding whether and how to regulate. Scores from the Mercatus Center’s Regulatory Report Card, an in-depth evaluation of the quality and use of regulatory analysis conducted by executive branch agencies, show that agencies often fail to explain how RIAs affected their decisions. For this reason, regulatory reform should require agencies to conduct analysis before making decisions and to explain how the analysis affected those decisions.

HOW MUST AGENCIES USE RIAS?

Executive Order 12866 contains several provisions explaining how agencies are to use the results of regulatory analysis:1

- An agency shall adopt a regulation “only upon a reasoned determination that the benefits of the intended regulation justify its costs.” (Sec. 1(b)(6))

- “Agencies should select those approaches that maximize net benefits … unless a statute requires another regulatory approach.” (Sec. 1(a)) (“Net benefit” is the difference between benefits and costs.)

- Agencies shall design regulations “in the most cost-effective manner to achieve the regulatory objective.” (Sec. 1(b)(5))

- “Each agency shall tailor its regulations to impose the least burden on society, including individuals, businesses of differing sizes, and other entities …” (Sec. 1(b)(11))

- For economically significant regulations, agencies shall assess the benefits and costs of alternatives and explain why the proposed regulation is preferable to the alternatives. (Sec. 6(a)(3)(C)(iii))

- The executive order recognizes that some benefits and costs may be qualitative rather than quantified or monetized. It also allows agencies to consider values other than benefits or costs, such as distributive impacts and equity. (Sec. 1(a))2
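The default decision rule in Sec. 1(a) is simple arithmetic once benefits and costs are monetized. The minimal Python sketch below assumes every benefit and cost is monetized in the same units, which the executive order itself does not require; the class and function names (`Alternative`, `max_net_benefits`) are hypothetical, and the figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    """One regulatory alternative (assumption: all effects monetized, same units)."""
    name: str
    benefits: float
    costs: float

    @property
    def net_benefits(self) -> float:
        # Net benefit is the difference between benefits and costs.
        return self.benefits - self.costs


def max_net_benefits(alternatives: list[Alternative]) -> Alternative:
    """Return the alternative with the largest net benefits (the Sec. 1(a) default)."""
    return max(alternatives, key=lambda alt: alt.net_benefits)


# Hypothetical figures for illustration only (e.g. $ millions per year).
example = [
    Alternative("A", benefits=120.0, costs=80.0),   # net 40
    Alternative("B", benefits=200.0, costs=150.0),  # net 50
    Alternative("C", benefits=90.0, costs=30.0),    # net 60
]

best = max_net_benefits(example)
print(best.name, best.net_benefits)  # C 60.0
```

Alternative C has the smallest benefits yet the largest net benefits, so maximizing net benefits is not the same as maximizing benefits or minimizing costs. A rule this mechanical also cannot capture the qualitative benefits, distributive impacts, or equity considerations the order allows; when those drive the choice, the agency has to explain them in words.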

HOW DO AGENCIES USE RIAS?

The Mercatus Center’s Regulatory Report Card evaluates the extent to which agencies use RIAs in their regulatory decisions.3 RIAs are a useful tool for regulatory decision making because they provide greater transparency into the thinking and processes that lead to an agency decision. RIAs create greater accountability for regulators’ decisions and ultimately for the outcomes a rule generates. Additionally, RIAs make it more likely that regulators will use society’s scarce resources efficiently. For these reasons, one Report Card criterion asks whether the agency claimed or appeared to use any part of the analysis to guide decisions. Another asks whether the agency selected the alternative that maximized net benefits or, if it chose another alternative, whether it explained why.

Both criteria assess how closely the agency followed the process laid out in the executive order. A high score does not mean that the evaluators agreed with the agency’s decisions, and a low score does not mean that they disagreed with them. The Report Card thus evaluates the degree to which an agency adhered to the requirements laid out in executive orders and OMB guidelines; it is not a judgment on the efficacy or reasonableness of the regulation itself.

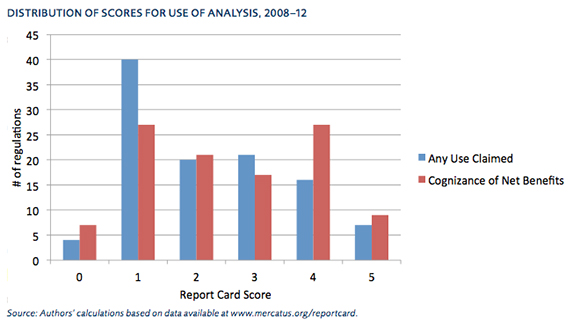

The chart above shows the frequency of each score for 108 prescriptive regulations in 2008–12. Regulations receive a score ranging from 0 (no useful content) to 5 (comprehensive analysis with potential best practices). The results are not encouraging:

- The RIA appeared to affect at least one major decision for only 21 percent of the regulations (score = 4 or 5). There is no evidence that the RIA was used at all for 60 percent of the regulations (score = 2 or lower).

- For 33 percent of regulations, the agency considered the net benefits of all alternatives that the analysis considered, then chose the alternative that maximized net benefits or explained the reason it chose another alternative (score = 4 or 5). The agency neither chose the alternative that maximized net benefits nor explained why it chose another option for 50 percent of the regulations (score = 2 or lower).
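The percentages above group the 0 to 5 scores into a high band (4 or 5) and a low band (2 or lower). A minimal sketch of that grouping follows; the function name `score_bands` and the ten sample scores are hypothetical and are not Report Card data.

```python
def score_bands(scores: list[int]) -> dict[str, float]:
    """Share of regulations in each Report Card score band (scores run from 0 to 5)."""
    if not scores:
        raise ValueError("need at least one score")
    if any(s < 0 or s > 5 for s in scores):
        raise ValueError("Report Card scores range from 0 to 5")
    n = len(scores)
    return {
        "4-5": sum(s >= 4 for s in scores) / n,  # high band
        "3": sum(s == 3 for s in scores) / n,    # middle band
        "0-2": sum(s <= 2 for s in scores) / n,  # low band
    }


# Hypothetical scores for ten regulations, not Report Card data.
sample = [5, 4, 3, 2, 2, 1, 0, 0, 3, 4]
print(score_bands(sample))  # {'4-5': 0.3, '3': 0.2, '0-2': 0.5}
```

Because scores are whole numbers, the two reported bands also pin down the middle one: for the first criterion, 21 and 60 percent leave roughly 19 percent of regulations with a score of 3; for the second, 33 and 50 percent leave roughly 17 percent (subject to rounding in the published figures).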

BEST AND WORST PRACTICES

Best Practice: Any Use of Analysis

In 2011, the Department of Justice (DOJ) proposed a regulation intended to reduce the incidence of rape in America’s prisons. The regulation stemmed from the Prison Rape Elimination Act of 2003, which established a commission to study the effects of prison rape and to recommend ways to prevent it. The law also mandated that DOJ avoid national standards “that would impose substantial additional costs compared to the costs presently expended by Federal, State, and local prison authorities.”4 The department commissioned an independent contractor to perform a cost analysis of the commission’s recommended standards.

In the RIA for the rule,5 DOJ did not estimate how much its proposed standards would reduce prison rape. However, the department performed a breakeven analysis that began by estimating the value to society of reducing the prevalence of prison rape and sexual abuse by 1 percent. It derived this value from the monetary benefit of avoiding prison rape, a figure drawn from the relevant literature on the costs of prison rape. DOJ then compared the proposed regulation’s costs with hypothetical reductions in prevalence to determine what level of reduction would justify the costs.

Based on the breakeven analysis, DOJ estimated that the standards would need to yield only a small percentage reduction from the baseline level of prison rape in any given year to justify the regulation, even without counting benefits that could not be quantified. DOJ found its proposal to be more cost-effective than the commission’s recommendations, for which the agency also performed a cost-effectiveness analysis. Although the RIA did not analyze net benefits, the agency made good use of the information it had available and clearly cited the economic analysis as a reason for modifying some of the commission’s recommendations to carry out the law’s directives.
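The breakeven logic described above reduces to one division: annual cost over the monetized value of a 1 percent reduction in prevalence. The sketch below is a minimal illustration, not DOJ’s model; it assumes benefits scale linearly with the reduction and counts only monetized benefits, and the function name and all inputs are hypothetical.

```python
def breakeven_reduction_pct(annual_cost: float,
                            baseline_victims: float,
                            value_per_victim_avoided: float) -> float:
    """Percent reduction in prevalence at which monetized benefits just equal costs.

    Assumes benefits scale linearly with the reduction and counts only
    monetized benefits; unquantified benefits would lower the true threshold.
    """
    # Monetized value of reducing baseline prevalence by 1 percent.
    value_of_one_pct = baseline_victims * value_per_victim_avoided / 100
    # Number of 1-percent steps needed for benefits to cover costs.
    return annual_cost / value_of_one_pct


# Hypothetical inputs, not DOJ's estimates.
pct = breakeven_reduction_pct(
    annual_cost=1_000_000,             # $ per year
    baseline_victims=10_000,           # victims per year under the baseline
    value_per_victim_avoided=100_000,  # $ per victimization avoided
)
print(f"{pct:.2f}% reduction needed")  # 0.10% reduction needed
```

The appeal of this approach is that it sidesteps the hardest estimate (how much the standards actually reduce abuse) and asks instead whether the required reduction is plausibly small.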

Best Practice: Maximizing Net Benefits

Also in 2011, the Department of Transportation (DOT) issued a regulation mandating the use of electronic on-board recorders on commercial motor vehicles. In the RIA for the rule,6 DOT explicitly stated that it chose the alternative that maximized net benefits. DOT analyzed three alternatives against multiple baselines and chose the alternative that produced the highest net benefits against each of the baselines. The first baseline reflected the level of noncompliance under current regulations, while the alternative baselines reflected proposals under another rule DOT was considering at the same time.

Unfortunately, the range of alternatives considered was narrow. Every option required electronic on-board recorders on certain commercial motor vehicles; the options differed only in which vehicles would be subject to the regulation. Thus, it is not clear whether the regulation maximized net benefits compared with all possible alternatives, but DOT clearly indicated how the net benefit calculations affected its choice among the alternatives it did consider.
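Checking that one alternative wins under every baseline, as DOT did, amounts to finding the maximum net benefits in each column of an alternatives-by-baselines table. A minimal sketch follows; the function names and the net benefit figures are hypothetical and are not taken from DOT’s analysis.

```python
from typing import Optional


def best_by_baseline(net_benefits: dict[str, dict[str, float]]) -> dict[str, str]:
    """For each baseline, name the alternative with the highest net benefits.

    net_benefits maps baseline name -> {alternative name -> net benefits}.
    """
    return {baseline: max(alts, key=alts.get) for baseline, alts in net_benefits.items()}


def robust_choice(net_benefits: dict[str, dict[str, float]]) -> Optional[str]:
    """Return the alternative that wins under every baseline, or None if winners differ."""
    winners = set(best_by_baseline(net_benefits).values())
    return winners.pop() if len(winners) == 1 else None


# Hypothetical net benefits ($ millions per year) for three alternatives
# under two baselines; not DOT's figures.
table = {
    "current noncompliance": {"Alt 1": 10.0, "Alt 2": 25.0, "Alt 3": 18.0},
    "companion-rule baseline": {"Alt 1": 6.0, "Alt 2": 15.0, "Alt 3": 12.0},
}
print(best_by_baseline(table))
print(robust_choice(table))  # Alt 2
```

A winner that holds across baselines is robust only among the alternatives in the table; if every row shares the same basic design, as with the on-board recorder options, the check says nothing about designs that were never analyzed.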

Best Practice: Explaining Factors Other Than Net Benefits

In 2012, the Department of Agriculture (USDA) proposed a regulation to modernize its system of poultry slaughter inspection. The rulemaking came as a result of President Obama’s Executive Order 13563 requiring executive branch agencies to review existing rules. The goal was to have agencies assess “rules that may be outmoded, ineffective, insufficient, or excessively burdensome, and to modify, streamline, expand, or repeal them in accordance with what has been learned.” 7 In response to this executive order, USDA reviewed its poultry slaughter inspection system to see if it could identify ways to increase efficiency and improve safety.

Shortly thereafter, USDA completed a qualitative risk assessment measuring how different inspection procedures affect the prevalence of human illnesses associated with tainted poultry.8 USDA determined that it could use its resources better if establishments sorted out more potentially tainted birds prior to inspection, which would let USDA concentrate more of its own resources on verifying compliance and sanitation standards.

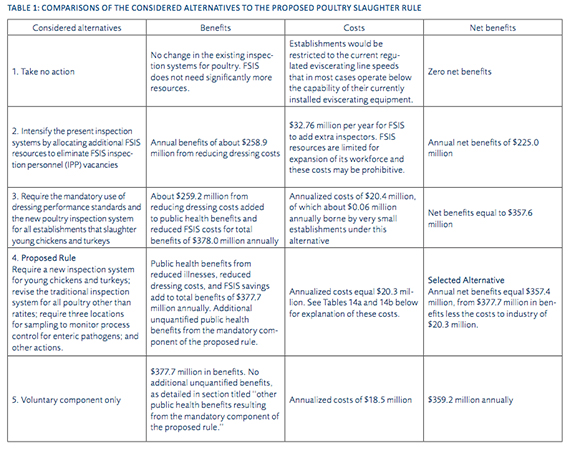

Using information from the risk assessment, USDA conducted a benefit-cost analysis of several alternative ways to modernize its poultry inspection system.9 One striking aspect of this RIA, as Table 1 shows, is how little the net benefits differ among the alternatives.

USDA rejected one alternative (alternative 3), which had slightly greater net benefits than the alternative ultimately chosen in the proposed rule, because of the disproportionate impact it would have on small businesses relative to larger firms. USDA determined that the alternative embraced by the proposed rule would not affect small businesses disproportionately. USDA also dismissed another alternative (alternative 5), even though it had higher net benefits than the proposed rule, because it determined that the alternative selected in the proposed rule had additional, unquantified benefits.
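What USDA did well is documenting, for each alternative that beat the chosen one on net benefits, the non-net-benefit reason it was passed over. A minimal sketch of that bookkeeping follows; the names (`Option`, `choose_with_explanations`) and all net benefit figures are hypothetical and do not reproduce Table 1, and only the alternative labels and rejection reasons echo the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Option:
    name: str
    net_benefits: float
    rejection_reason: Optional[str] = None  # non-net-benefit factor, if any


def choose_with_explanations(options: list[Option]) -> tuple[Option, list[str]]:
    """Pick the highest-net-benefit option not rejected on other grounds.

    Also returns an explanation for every option that ranked higher on
    net benefits but was passed over.
    """
    explanations = []
    for opt in sorted(options, key=lambda o: o.net_benefits, reverse=True):
        if opt.rejection_reason is None:
            return opt, explanations
        explanations.append(
            f"{opt.name} not chosen despite net benefits of {opt.net_benefits}: "
            f"{opt.rejection_reason}"
        )
    raise ValueError("every option was rejected")


# Hypothetical net benefits ($ millions); not USDA's Table 1 values.
options = [
    Option("Proposed rule", 95.0),
    Option("Alternative 3", 100.0, "disproportionate impact on small businesses"),
    Option("Alternative 5", 98.0, "proposed rule has additional unquantified benefits"),
    Option("Alternative 2", 60.0),
]
chosen, why = choose_with_explanations(options)
print(chosen.name)  # Proposed rule
for line in why:
    print(line)
```

Only options that outrank the choice on net benefits need an explanation, which mirrors the executive order’s requirement: maximize net benefits or say why not.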

Worst Practice: The Black Hole

All too often, the worst practice is a complete absence of content. For a majority of the regulations, agencies made no claim to use the analysis, and there is no evidence in the notice of proposed rulemaking (NPRM) that the agency took the analysis into account. Numerous regulations also simply lacked information on the net benefits of multiple alternatives, or any information on net benefits at all.

CONCLUSIONS

Based on the content in NPRMs and RIAs, agencies appear to routinely ignore Executive Order 12866’s mandate that they use the results of RIAs to inform their decisions. In many cases, the analysis is not complete enough to inform decisions, or agencies do not even claim to have used it.

At a minimum, this is a significant transparency problem. Perhaps regulatory analysis does inform agency decisions during the course of internal deliberations. If so, the public record often fails to document this influence.

Alternatively, NPRMs and RIAs may fail to document the influence of analysis on decisions because agencies made major decisions before conducting or completing the analysis. Previous research on regulatory impact analysis suggests that this occurs frequently.10

In either case, current regulatory institutions fail to hold agencies sufficiently accountable for explaining how their analysis informs their decisions. Regulatory reform could improve accountability by (1) requiring agencies to conduct and publish preliminary RIAs before making regulatory decisions, including the decision on whether to regulate in the first place, and (2) requiring agencies to document how the RIA informed their decisions.11

ENDNOTES

1. Exec. Order No. 12866, 58 Fed. Reg. 190 (October 4, 1993): 51,735–51,744.

2. President Obama’s Executive Order 13563 adds several other values that may not be benefits or costs, such as fairness and human dignity. See Exec. Order No. 13563, 76 Fed. Reg. 11 (January 21, 2011): 3,821.

3. The Report Card methodology and 2008 scoring results are in Jerry Ellig and Patrick McLaughlin, “The Quality and Use of Regulatory Analysis in 2008,” Risk Analysis 32, no. 5 (May 2012): 855–80. The Report Card is an ongoing project evaluating the quality of regulatory analyses that agencies conduct for major regulations. Scores for all regulations evaluated in 2008 and subsequent years are available at www.mercatus.org/reportcard.

4. Prison Rape Elimination Act of 2003, Pub. L. No. 108-79, Stat. 972 (2003).

5. US Department of Justice, Proposed National Standards to Prevent, Detect and Respond to Prison Rape Under the Prison Rape Elimination Act: Initial Regulatory Impact Analysis for the Proposed Regulation (January 2011).

6. US Department of Transportation, Electronic On-Board Recorders and Hours-of-Service: Preliminary Regulatory Evaluation (January 2011).

7. Exec. Order No. 13563, 76 Fed. Reg. 11 (January 21, 2011).

8. United States Department of Agriculture, FSIS Risk Assessment for Guiding Public Health-Based Poultry Slaughter Inspection (November 2011).

9. United States Department of Agriculture, Modernization of Poultry Slaughter Inspection, 77 Fed. Reg. 18 (January 27, 2012).

10. Richard Williams, “The Influence of Regulatory Economists in Federal Health and Safety Agencies” (Working Paper No. 08-15, Mercatus Center at George Mason University, Arlington, VA, July 2008), http://mercatus.org/sites/default/files/publication/WP0815_Regulatory%20Economists.pdf; Wendy E. Wagner, “The CAIR RIA: Advocacy Dressed up as Policy Analysis,” in Reforming Regulatory Impact Analysis (Washington, DC: Resources for the Future, 2009): 57.

11. Richard B. Belzer, “Principles for an Effective Regulatory Impact Analysis Challenge Function,” Policy Horizons Canada, Horizons 10, no. 3 (May 2009), http://www.horizons.gc.ca/page.asp?pagenm=2009-0014_07.